IBM's research reveals how security is often overlooked in GenAI projects

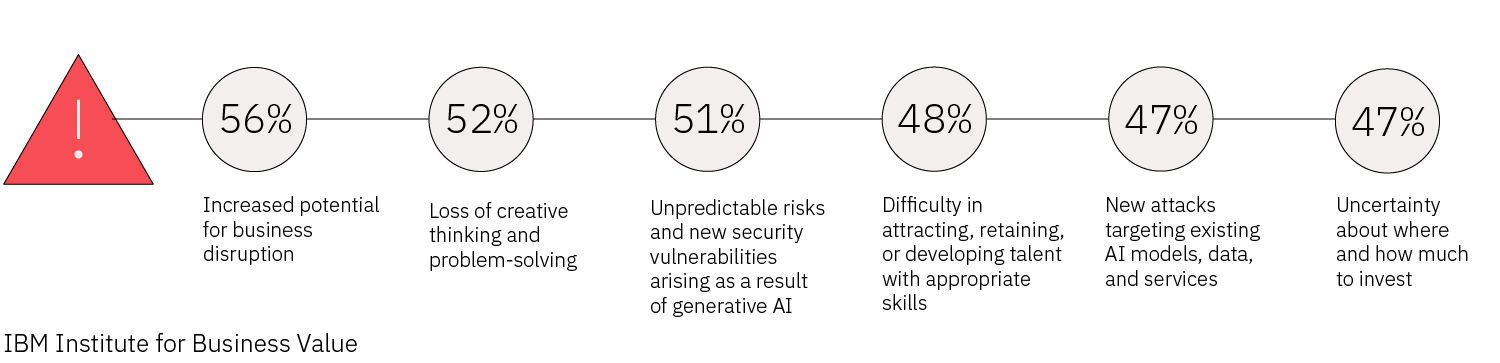

A recent IBM survey reveals a worrying gap in AI security practices. While 82% of C-suite executives agree that trustworthy and secure AI is crucial for their organizations, only 24% have integrated security into their generative AI (GenAI) projects. Despite acknowledging the risks, a significant majority (nearly 70%) prioritize innovation over security.

The study highlights that while the AI threat landscape is still evolving, current concerns include the misuse of AI tools like ChatGPT for creating phishing content and deepfake audio. IBM’s X-Force researchers predict that as AI technology matures, cybercriminals will increasingly target these systems. Furthermore, inadequate security measures can lead to data leaks and unauthorized use of GenAI tools within organizations.

To address these issues, IBM is advocating a structured approach to securing GenAI, which includes protecting data, scanning AI models for vulnerabilities, and enforcing stringent access controls. Its new AI testing service aims to bolster security through red teaming.

Securing AI from the outset is crucial, especially as GenAI becomes deeply embedded in business operations, processing sensitive data that offers competitive advantages. Failing to secure these assets could result in significant losses, both financially and in terms of intellectual property.

Source: IBM study shows security for GenAI projects is an afterthought | TechTarget